Workflow Suggestions for Music Collaborations

One of the most underestimated approaches to electronic music is collaboration. It seems to me that because of electronic music’s DIY approach, people believe they need to do absolutely everything themselves. However, almost every time I’ve collaborated with others I hear them say “wow, I can’t believe I haven’t done that before!” Many of us want to collaborate, but actually organizing an in-person session can be a challenge. In thinking about collaboration and after some powerful collaboration sessions of my own, I noted what aspects of our workflow helped to create a better outcome. I find that there are some do’s and don’ts in collaborating, so I’ve decided to share them with you in this post.

Have a plan

I know this sounds obvious, but the majority of people who collaborate don’t really have a plan and will just sit and make music. While this works to some degree, you’re really missing out on the extra fun that comes from planning ahead. I’m not talking about big, rigid plans, but simply about having an idea of what you want to accomplish in a session. Deciding you’ll jam can be a plan in itself, deciding to work on an existing track could be another, and working on an idea you’ve already discussed could be a more precise plan.

Personally, I like to have roles decided for each person before the session. For example, I might work on sound design while my partner might be thinking about arrangements. When I work with a musician, I usually already have in mind that this person does something I don’t do, or does it better than I can. The most logical way to work is to have each participant take a role in which they do what they do best.

If you expect yourself to get the most out of sound design, mixing, beat sequencing, editing, etc., all at once, you’re probably going to end up a “jack of all trades, master of none”. Working with someone else is a way to learn new things and to improve.

A good collaborative session creates a total sense of flow; things unfold naturally and almost effortlessly. With that in mind, having a plan gives the brain a framework that determines the task(s) you need to complete. One of the rules of working in a state of flow is to do something you know you do well, but to create a tiny bit of challenge within it.

Say “yes” to any suggestions

This is a rule that I really insist on, though it might sound odd at first. Even though sometimes an idea seems silly, you should say yes to it because you’ll never know where it will lead you unless you try it. I’ve been in a session where I’ve constantly had the impression that I was doing something wrong because we weren’t following the “direction” of the track I had in my head. But what if veering off my mental path leads us to something new and refreshing? What if my partner – based on a suggestion that may have seemed wrong at first – accidentally discovered a sound we had no idea would fit in there?

This is why I find that the “yes” approach is an absolute win.

Saying yes to everything often just flows more naturally than saying no. However, if the “yes” approach doesn’t work easily, don’t force it; it’s much better to put an idea aside and return to it another day if it’s not working.

Trust your intuition; listen to your inner dialogue

When you work with someone else, you have another person who’s also hearing what you’re hearing, and who will interact with the same sounds and try new things. This new perspective disconnects you from your work slightly and gives you a bit of distance. If you pay attention, you’ll notice that your inner dialogue may go something like “oh, I want a horn over that! Oh, let’s bring in claps!” That inner voice is your intuition, your culture, and your mood, throwing out ideas; sharing these ideas with one another can help create new experiments and layers in your work.

Combining this collaborative intuition with a “yes” attitude will greatly speed up the process of completing a track. Two people coming up with ideas for the same project often work faster and better than one.

Take a lot of breaks

It’s easy to get excited when you’re working on music with another person, and when you do, some ideas might feel like they’re the “best new thing”, but these same ideas could actually be pretty bad. You need time away from them to give yourself perspective; take breaks. I recommend pausing every 10 minutes. Even pausing for a minute or two to talk or to stand up and stretch will make a difference in your perceptions of your new ideas.

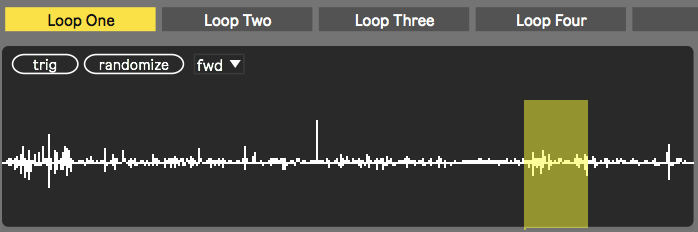

Centralize your resources

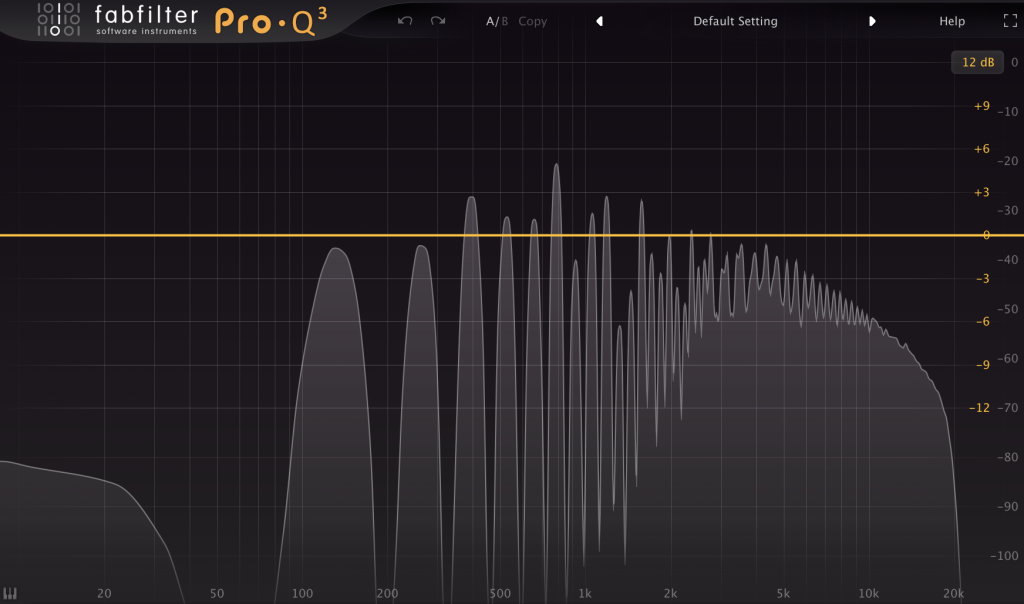

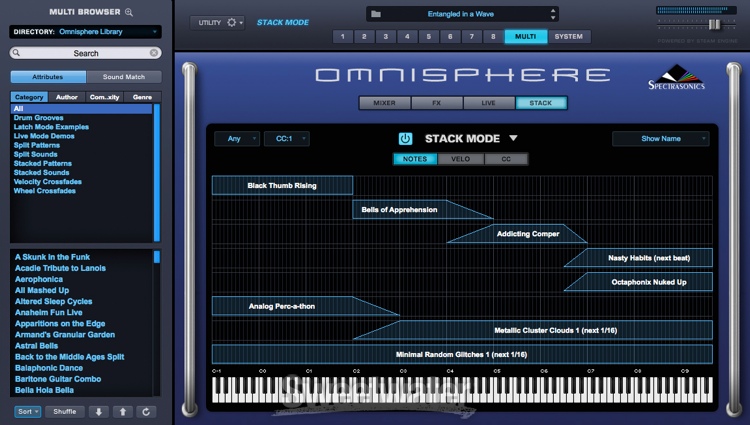

In collaborating, when you reach the point of putting together your arrangements, I would say that it’s important to have only one computer as the main control station for your work. Ideally you’d want an external hard drive that you can share between computers easily; this way you can use everyone’s plugins to work on your sounds. One of the most useful things about teaming up with someone else is that you get access to their resources, skills, materials, and experience. Make sure to get the most out of collaborating by knowing what resources you can all draw upon, and then select a few things you want to focus your attention on. It’s easy to get distracted or to think you need something more, but I can tell you that you can do a lot with whatever tools you have at that moment. Working with someone else can also open your eyes to tools you perhaps didn’t fully understand, were not using properly, or were not using to their full potential.

Online collaboration is different

Working with someone through the internet is a completely different business than working together in person. It means that you won’t work at the same time, and some people also work more slowly or more quickly than you do. I’ve tried collaborating with many people online and it doesn’t always work. It takes more than just the will of both participants to make it work; it demands some cohesion and flexibility. All my previous points about collaborating in person also apply to collaborating online. Assigning roles and having a plan really helps. I also find that sharing projects that aren’t working for me with another person will sometimes give them a new life.

If you’re a follower of this blog, you’ll often read that one of the most important things I stress about production is to let go of your tracks; this is essential in collaborating. I usually try to shut off the inner voice that tells me that my song is the “next hit” because thinking this way rarely works. No one controls “hits”, and being aware of that is a good start. That said, when you work with someone online, this person is not in the room with you and might work on the track while you’re busy with something else, so I find it works best to be relaxed about the outcome. This means that if I have a bad first impression of what I’m hearing from the person I’m working with, I usually wait a good 24 hours before providing any feedback.

What if you really don’t like what your partner is making?

Not liking your partner’s work is probably the biggest risk in collaborating. If things are turning out this way in your collaboration, perhaps you didn’t use a reference track inside the project, or didn’t set up a proper mood board. A good way to avoid problems in collaboration is to make sure that you and your partner are on the same page mentally and musically before doing anything. If you both use the same reference track, for example, it will greatly help to avoid disasters. If you don’t like a reference track someone has suggested, I recommend proposing ones you love until everyone agrees on one. If you and your partner(s) never agree, don’t push it; maybe work with someone else.

The key to successful collaborations is to keep it simple, work with good vibes only, and to have fun.

SEE ALSO: Synth Basics