Pheek’s Guide To Making Dub Techno

A guide to making Dub Techno is one of the most requested blog posts I’ve been asked to write, and for years, I resisted it. I think there was some shyness and, perhaps, a lack of the technical vocabulary to know where to begin when teaching others how to make Dub Techno. But I think it’s time to take a chance and open up about all the ideas I’ve compiled on my beloved Dub Techno direction.

This post won’t necessarily explain how to make typical dub techno. While I’ll cover some of the most frequently asked questions about it, I want to expand on the philosophy and aesthetic itself so you can take the best part of it and merge it into how you work.

Origins of Dub Techno

Before we get into how to make dub techno, it’s very important to me to honor the artists behind the genre and to talk about where it started. For this, there are some nice videos by Dub Monitor; these 2 explain how the genre started and developed better than I could.

Dub techno is a subgenre that emerged from the fusion of two influential musical styles: dub and techno. Dub music itself has its origins in Jamaica in the late 1960s, with pioneers like King Tubby and Lee “Scratch” Perry. Dub music is characterized by its heavy use of effects, echo, reverb, and the manipulation of existing tracks, often stripping away vocals to emphasize the rhythm and instrumental elements.

The Techno Connection: Techno, on the other hand, had its beginnings in Detroit in the early 1980s, with artists like Juan Atkins, Derrick May, and Kevin Saunderson. Techno is known for its repetitive beats, synthetic sounds, and a futuristic, often industrial, aesthetic.

The Emergence of Dub Techno: Dub techno began to take shape in the early 1990s, when electronic musicians started fusing dub’s spacious, echo-laden soundscapes with the rhythmic patterns and synthetic textures of techno. The result was a genre that retained the hypnotic beats of techno but incorporated atmospheric, dub-infused elements.

Basic characteristics to consider while making dub techno: Dub techno is characterized by a few key elements:

- Reverberating Soundscapes: Dub techno producers use extensive reverb and delay effects to create deep and immersive sonic environments. These effects give the music a sense of spaciousness and depth.

- Minimalism: Similar to techno, dub techno often relies on minimalistic compositions with a focus on repetition. The use of minimal elements allows for a meditative and trance-inducing quality.

- Subdued Rhythms: While techno can have a pounding and relentless rhythm, dub techno tends to have more subdued and laid-back beats. The rhythm is often more relaxed and groovy.

- Incorporation of Dub Techniques: Dub techno incorporates dub’s signature techniques like echo, dropouts, and phase shifting to create a sense of movement and exploration within the music.

Notable Pioneers: Some of the early pioneers of dub techno include Basic Channel, a German duo consisting of Moritz von Oswald and Mark Ernestus, and their various aliases like Maurizio and Quadrant. These artists were instrumental in shaping the genre and creating its distinctive sound.

Global Influence: Dub techno’s influence quickly spread beyond Germany, with artists and labels from around the world embracing the genre. Labels like Chain Reaction and Echocord played a significant role in promoting dub techno, and artists from countries like Sweden, Finland, and Japan contributed to its global appeal.

Obsessing on the “How-To” While Making Dub Techno

Over the last 25 years, I’ve come across countless discussions online about how the genre is made. People discuss which piece of equipment was used and obsess over recreating the original sound. While I totally get this state of mind (I also get obsessed with how certain sounds are made), I can’t help asking why you would want to reproduce the exact same results. In a way, it explains why the genre never died over the last decades: there are always people who keep making dub techno.

I see a few motivations for joining the dub techno sound. On one side, it’s a self-soothing experience; on the other, it’s a passion shared with others who also make it.

But my take is that people are puzzled by how something that sounds so simple can actually be so mind-boggling to make.

The Main Aspects Of Making Dub Techno

I’d like to cover multiple techniques and strategies to infuse your music with the dub techno approach, while also applying certain tweaks that give your music a similar aesthetic.

The first thing to explain is that there are 3 main categories to consider while making dub techno:

- Sound Design

- Modulation

- Colour

Dub techno has its own touch and sound which will be explored below.

Dub Techno sound design

One of the main characteristics of dub techno comes from its pads and stabs, which are fuzzy, melancholic, and enigmatic. Those pads aren’t necessarily that complex to make. There are numerous tutorials on YouTube, and I have 3 favorites: they’re well explained and show, in similar ways, how to reproduce them.

How To Make Dub Techno Chords

As you’ll see in those tutorials, the synth configuration is rather simple: it’s usually one chord that repeats, but with specific modulation and colour, as mentioned above. Once you start experimenting with this pad, you’ll already be in business and have the basis for making dub techno.

But honestly, when I found out how to do it, I thought it would make more sense to take inspiration from those techniques and go a bit deeper into the sound design.

In my past dub albums, Tones of Void, Intra, and White Raven, I basically used a bunch of synths but kept them very dark in tone (e.g., low notes, around octaves 1 to 3) and without too many harmonics (e.g., filtered). Once you understand that any synth can do that, you won’t be limited to the classic sound of dub techno.

How to make dub techno melodies

When it comes to melody-making, there are multiple approaches. There’s the classic one note (yes, one note) from old-school dub techno (Basic Channel, Chain Reaction), and there’s also a more structured, almost pop-oriented approach (Pablo Bolivar, Yagya). Both work, but the melodies are usually in a minor key, with D being a popular root.
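To put numbers on a minor key with a D root, voiced low and dark, here’s a small Python sketch that builds a D minor triad and converts MIDI note numbers to frequencies. It assumes standard equal temperament with A4 = 440 Hz at MIDI note 69 and the common C4 = 60 octave convention; `midi_note`, `midi_to_hz`, and `minor_triad` are hypothetical helper names, and octave 2 is just an illustrative choice.

```python
# Pitch classes, counting semitones up from C.
PITCH_CLASS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
               "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def midi_note(name: str, octave: int) -> int:
    """MIDI note number under the common convention where C4 = 60."""
    return 12 * (octave + 1) + PITCH_CLASS[name]


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12)


def minor_triad(root: int) -> list[int]:
    """Root, minor third (+3 semitones), perfect fifth (+7 semitones)."""
    return [root, root + 3, root + 7]


# A dark D minor chord voiced low, in octave 2 (an illustrative choice).
chord = minor_triad(midi_note("D", 2))
for note in chord:
    print(note, round(midi_to_hz(note), 2))
```

Voiced in octave 2, that gives D2, F2, and A2, with A2 landing on exactly 110 Hz. Moving the root down or up an octave halves or doubles every frequency.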

How to make dub techno bass

In dub techno, the bass is usually very simple too, more often than not a single note. A sine oscillator pushed forward in the mix is often all the dub aesthetic needs.
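As a sketch of that one-note sine bass, here’s a minimal Python generator. The `sine_bass` name, the 44.1 kHz sample rate, and the 5 ms fades are assumptions for illustration; the fades only exist to avoid clicks at the start and end of the note.

```python
import math


def sine_bass(freq_hz: float, seconds: float, sample_rate: int = 44100,
              fade_ms: float = 5.0) -> list[float]:
    """One sustained sine note with short linear fades to avoid clicks."""
    n = int(seconds * sample_rate)
    fade = max(1, int(fade_ms / 1000 * sample_rate))
    out = []
    for i in range(n):
        s = math.sin(2 * math.pi * freq_hz * i / sample_rate)
        # Ramp up over the first `fade` samples and down over the last ones.
        gain = min(1.0, i / fade, (n - 1 - i) / fade)
        out.append(s * gain)
    return out


# One note on D1 (about 36.71 Hz) held for one bar at 120 BPM: 4 beats x 0.5 s = 2 s.
bass = sine_bass(36.71, 2.0)
```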

Dub Techno synth options

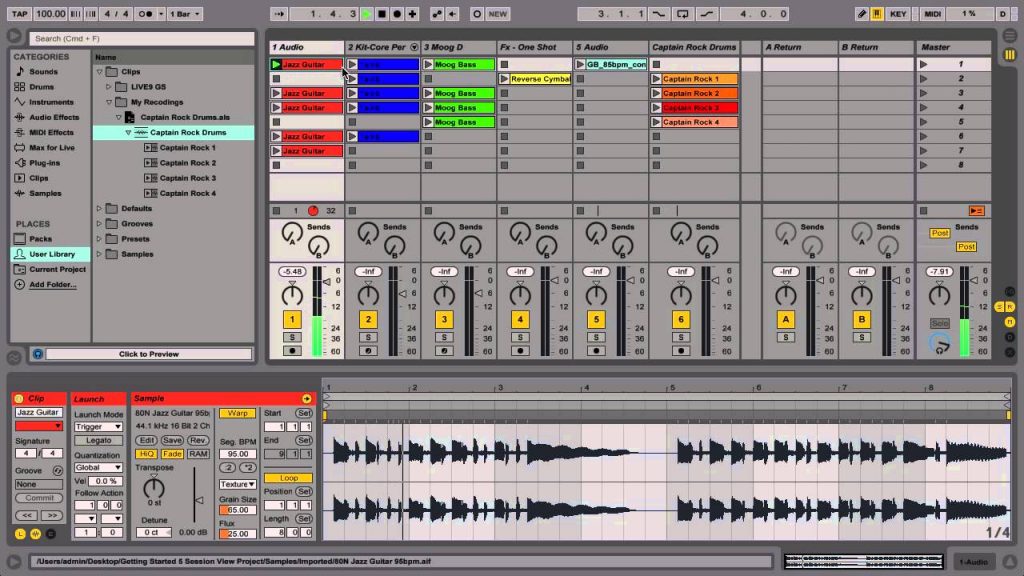

As we saw in the 3 videos, dub can easily be created in any context with any soft synth. Over the years, I have tried and tested many of them. While Ableton’s native plugins can do the job, VCV has been my playground, but it’s not for everyone, even if it’s free and there are so many tutorials out there. Here are some of the soft synths people love.

- Diva: So many tracks I hear in mastering use Diva. It has a distinct sound, but in a good way: warm, lush, and close to some hardware options. It’s pricey, but you get that sound we love.

- Pigments: Pigments is versatile, open, powerful, and extremely creative. There is a huge playground here with the option of a preset store within the synth itself. You need to work a bit to get the dub sound but it sounds nice.

- TAL-U-NO-LX Synth: The Juno has been used over the years as a default synth for dub techno, mainly for the chords. This synth option works well and is close to the real thing.

- Go2: Cheap in price but with big results. Even the presets will give you some nice options to start with. I love this one.

- Blue-III: Rob Papen again (he also makes Go2), and this one is deep. Not for beginners, as you can easily get lost in it, but the sounds you get are very impressive.

- Prophet VS-V: Not many people know this, but it’s said that the Prophet VS was what the early Chain Reaction artists were using. When a VST version came out, we were all drooling. While it’s very powerful and nice, it’s not the easiest to program. But the sound is very impressive.

- Prophet 5: This is a synth we all used for years in the early 2000s when we wanted the dub sound. It has aged so well, and it’s fun to use. You get tons of options for synths, pads, and stabs. Recommended, and often on sale.

- Orange Vocoder: This isn’t a synth per se (it’s a vocoder, of course), but so many people used it because you can basically throw any sound into it and it will turn it into a lush synth sound. Really powerful, and a nice alternative to synths alone.

Dub is a vibe and an aesthetic, not a bible. You take the aesthetic and apply it to any sound.

Once you pick your aesthetic, you can apply the concept to any synth you have.

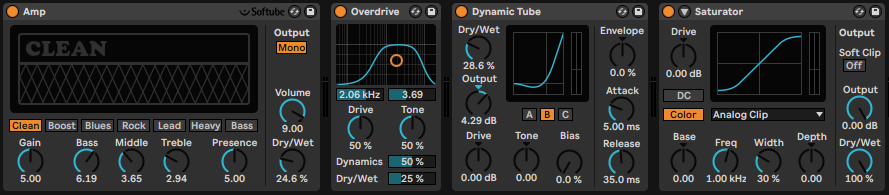

What you need is to create and amplify harmonics with saturation (tube and tape do great), then pass the sound through a coloured filter and send it to delay and reverb for cosmetics. In other words, you can take any synth you own, dirty it up with saturation, and then filter it. The reverb will then do the trick.
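Here’s a minimal Python sketch of the “saturate, then filter” order described above. A tanh soft clipper stands in for tube/tape saturation and a one-pole low-pass stands in for the coloured filter; both are simplifications, and `saturate`, `one_pole_lowpass`, and `dub_color` are hypothetical names. The output is what you’d then send to delay and reverb.

```python
import math


def saturate(x: float, drive: float) -> float:
    """tanh soft clipping; more drive = more harmonics.
    Dividing by tanh(drive) keeps a full-scale input (+/-1) at +/-1."""
    return math.tanh(drive * x) / math.tanh(drive)


def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """Gentle 6 dB/octave low-pass that tames the added harmonics."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out


def dub_color(samples, drive=3.0, cutoff_hz=800.0, sample_rate=44100):
    """Saturate first (create harmonics), then filter (shape them)."""
    dirty = [saturate(x, drive) for x in samples]
    return one_pole_lowpass(dirty, cutoff_hz, sample_rate)


# Example input: one second of a 110 Hz sawtooth at half scale.
saw = [0.5 * (2.0 * ((110.0 * n / 44100) % 1.0) - 1.0) for n in range(44100)]
colored = dub_color(saw)
```

Raising `drive` squashes peaks harder and adds more harmonics, which is the “overcooking” risk mentioned in the next paragraph.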

What makes a difference is juggling the main types of saturation: distortion, amp, and saturation itself. A combination of the 3 will bring really lovely colours, but be careful not to overcook your sounds. Keep in mind that mastering will boost the level, so what sounds like a pleasant distortion now can become overwhelming later on.

Some of my saturation go-tos are below.

Saturation plugins used in making dub techno

- Surge XT (FREE!): Made by a collective of developers who managed to create a badass synth along with free, high-quality effects. Their Chow Tape is quite amazing, and so are all the other distortion/saturation tools. A must-have and, hey, it’s free.

- RC-20: This one became popular in the lofi hip-hop community as a de facto must-have plugin. It adds lofi vibes, and since dub techno also has that element, the cross-use is totally on point.

- Reamp: The developers behind this one have a beautiful series of plugins, all very solid. I like the colour this one has.

- Saturn 2: Anything FabFilter makes is a leader in its domain, and Saturn is no exception. It’s very good at what it does, which is making anything too pretty a bit uglier and dirtier.

- PSP Saturator: PSP is one of my favorite plugin companies. I love their EQs and compressors, and this saturator does a great job on pads.

- Satin: This is such a handy tool here. It is tape saturation/simulation, but also a tape delay which can create weird reverbs and wobbly signals. Once you start using it, you’ll be using it all the time.

Reverb plugins for dub techno

While Ableton’s reverb options can do the job, I always rely on third-party VSTs for that part. It’s hard not to mention Valhalla plugins here: the Supermassive is free, and the Digital Reverb is sort of a perfect match for dub. If you have to pick, I’d recommend experimenting with “plate” models, plus some use of a “hall” for sustained notes.

Whatever you do, to me, Dub Techno really starts with heavy use of reverb, which has to be modulated, filtered, and distorted. Understanding how to use your reverb and combine it with a delay will ensure you have a proper dub mood. If you pick the reverb properly, you could almost say 50% of your job is done. The rest is the sum of a lot of details, but when your reverb is right, you’ll automatically feel you’re making dub.
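To show what sits inside a basic algorithmic reverb, here’s a minimal Schroeder-style sketch in Python: four parallel feedback combs with a low-pass in each loop (Freeverb-style damping, so the tail gets darker as it decays, which is one way to get the “filtered” part), followed by two all-pass diffusers. It’s a teaching sketch, not how any of the plugins below work internally. The delay times are illustrative values in the spirit of Schroeder’s classic design, and the class and function names are hypothetical. Modulation and distortion would be layered on top.

```python
class DampedComb:
    """Feedback comb filter with a one-pole low-pass inside the loop
    (Freeverb-style damping): each pass around the loop gets darker."""

    def __init__(self, delay_samples: int, feedback: float, damping: float):
        self.buf = [0.0] * delay_samples
        self.idx = 0
        self.feedback = feedback
        self.damping = damping  # 0 = bright tail, closer to 1 = darker tail
        self.store = 0.0

    def process(self, x: float) -> float:
        out = self.buf[self.idx]
        # Low-pass the delayed signal before feeding it back in.
        self.store = out * (1.0 - self.damping) + self.store * self.damping
        self.buf[self.idx] = x + self.store * self.feedback
        self.idx = (self.idx + 1) % len(self.buf)
        return out


class Allpass:
    """Schroeder all-pass diffuser: smears echoes into a denser wash."""

    def __init__(self, delay_samples: int, gain: float = 0.7):
        self.buf = [0.0] * delay_samples
        self.idx = 0
        self.gain = gain

    def process(self, x: float) -> float:
        delayed = self.buf[self.idx]
        y = -self.gain * x + delayed
        self.buf[self.idx] = x + self.gain * y
        self.idx = (self.idx + 1) % len(self.buf)
        return y


def schroeder_reverb(samples, sample_rate=44100, feedback=0.84, damping=0.3, wet=0.3):
    """Four parallel damped combs followed by two series all-passes."""

    def ms(t):
        return int(t * sample_rate / 1000)  # milliseconds -> samples

    combs = [DampedComb(ms(t), feedback, damping) for t in (29.7, 37.1, 41.1, 43.7)]
    allpasses = [Allpass(ms(t)) for t in (5.0, 1.7)]
    out = []
    for x in samples:
        y = sum(c.process(x) for c in combs) / len(combs)
        for ap in allpasses:
            y = ap.process(y)
        out.append((1.0 - wet) * x + wet * y)
    return out
```

Raising `feedback` lengthens the tail, and raising `damping` makes it darker faster.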

Anyone who knows me has heard my affectionate passion for reverbs. I compiled some of my favorites for Dub Techno.

The saturation tools native to Ableton aren’t too bad, but an educated ear can easily recognize them.

- Lexicon 224: I’m a big fan of Lexicon. It has a character and tone that I love. Not sure what it is, but the grain and how it feels just does it for me.

- Springs: Spring reverb makes the sounds sent through it sound liquid. It works well on percussive sounds and brings some classic dub vibes.

- Fabfilter Pro-R: This one is amazing for spaces. It is a powerful tool to shape grandiose halls and give tremendous space.

- Adaptiverb: It has several different tools that make it unique, including a big array of presets tuned to a root key, which can create pads out of unusual sounds.

- SP2016: I call this one the Cadillac of reverbs. It’s elegant, warm, very pleasant to the ear, and very visual. I feel immersed when using it.

- bx_rooms: Extremely versatile but the interface can be intimidating. It has lovely options for different room types.

- Blackhole: This one is spooky, deep, and powerful. It’s a reverb that sends you into space, sounding pretty sci-fi, rich, and sometimes gigantic.

Reverbs are essential for dub, but you’ll need delays as well, either long or short. There’s no right or wrong; delays take very simple sounds and create repetition, which transforms a straightforward pattern into psychedelic equations. Combined with reverb, delays create a thick background and fill any sound that feels empty at first with a velvety, dreamy carpet. I think for a lot of fans of the genre, that’s the quality they’re after.
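Here’s a minimal Python sketch of a tempo-synced feedback delay of the kind used for dub echoes. It puts a one-pole low-pass inside the feedback loop, so every repeat comes back quieter and darker; that’s a common dub-delay design choice, not a claim about how any specific plugin below works. `delay_samples_for` and `dub_delay` are hypothetical names, and the default `feedback` and `tone` values are arbitrary.

```python
def delay_samples_for(bpm: float, beats: float, sample_rate: int = 44100) -> int:
    """Tempo-synced delay length; beats=0.75 is a dotted eighth, 0.5 an eighth."""
    return round(60.0 / bpm * beats * sample_rate)


def dub_delay(samples, delay_len, feedback=0.6, tone=0.4, wet=0.5):
    """Feedback delay with a one-pole low-pass in the loop, so each repeat
    is quieter (feedback < 1) and darker (tone > 0)."""
    buf = [0.0] * delay_len
    idx = 0
    lp = 0.0
    out = []
    for x in samples:
        echo = buf[idx]
        lp = (1.0 - tone) * echo + tone * lp  # darken what gets fed back
        buf[idx] = x + feedback * lp
        idx = (idx + 1) % delay_len
        out.append((1.0 - wet) * x + wet * echo)
    return out
```

For a dotted-eighth echo at 120 BPM, `delay_samples_for(120, 0.75)` gives a 0.375 s delay. Keep `feedback` below 1.0 so the repeats die out; this sketch has no limiter to tame runaway feedback.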

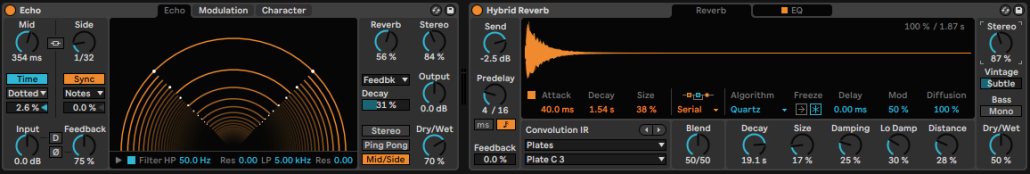

Echo and Hybrid Reverb in Ableton. They can go a long way if you don’t want to break your piggy bank.

Additional plugins for making dub techno (delays, pitch modulators, etc.)

- Diffuse: These developers are dub lovers, and this tool is a go-to for reverb/delay, as it’s an emulation of the famous Roland Space Echo, which was in so many studios.

- Modnetic: Same developers as above. This one combines everything you need in one place to turn a single, boring sound into a dub tune.

- Echorec: The team at Pulsar is very good at recreating hardware toys, and here they recreated the Binson Echorec, a magnetic-drum echo, with self-oscillation and all you could wish for in a dirty delay.

- Galaxy Tape Echo: This is UAD’s recreation of the Roland Space Echo and it is really well done.

- Tal Dub-X: As the name implies, this is a station with all the options to turn a simple delay into a modulated one.

- Echo Cat: Another beautiful tape delay emulation, and a really solid one.

- PSP 42: Popularized by Richie Hawtin in the early 2000s, when he’d loop-delay sounds and pitch them up and down, the PSP 42 was used heavily in all his sets for years. Rich was basically doing dub techniques in his own way.

Modulation in dub techno

If you take any synth sound and send it to your effect chains, you’ve done the first step, but it won’t be complete until you make it move, react, and evolve through modulation. There’s a lot to take in here because this is also one of the most discussed topics on my blog. I’ve already covered it inside out, and now you know why: Dub Techno is all about modulation. Once you dip your toe in those waters, you’ll get excited about it and apply it everywhere.

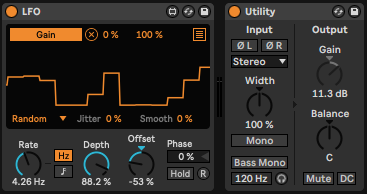

If you watched those tutorials on how the dub pads and chords are made, you’ll see that they modulate the filter: both an envelope and an LFO move the filter’s cutoff frequency, and its resonance as well. That’s just the tip of the iceberg to me. If a plugin has a parameter, I like to think it shouldn’t remain static, so I make it move, even a little bit.

And of course, a lot of this can be handled by my favorite “Swiss army knife”, Shaperbox, which is designed for modulation at every level. A must-have.

When to use envelopes and LFOs when making dub techno

Well, if you want a modulation that reacts to an incoming signal (for example, when a sound comes in, you want the filter to react), use an envelope. That kind of modulation is excellent for accentuating or attenuating sounds, giving the processed sound a more organic feel.

If you want constant movement, LFOs are excellent for that. They move either in sync with the tempo or freely. They give the illusion that things are constantly on the go and help blur the lines of linear arrangements.
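To make the envelope-versus-LFO distinction concrete, here’s a minimal Python sketch that drives a filter cutoff from both sources. The envelope follower reacts to the incoming signal, and the LFO keeps moving regardless. All names (`envelope_follower`, `lfo`, `cutoff_curve`) and default values are hypothetical; the resulting list of cutoff values would feed whatever filter you use.

```python
import math


def envelope_follower(samples, attack_ms=5.0, release_ms=150.0, sample_rate=44100):
    """Tracks the level of the incoming signal: rises fast, falls slowly."""
    att = math.exp(-1.0 / (attack_ms / 1000 * sample_rate))
    rel = math.exp(-1.0 / (release_ms / 1000 * sample_rate))
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)
        coeff = att if level > env else rel  # attack when rising, release when falling
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out


def lfo(num_samples, rate_hz, sample_rate=44100):
    """Sine LFO in [-1, 1]. Tempo sync: rate_hz = bpm / 60 / beats_per_cycle."""
    return [math.sin(2 * math.pi * rate_hz * i / sample_rate) for i in range(num_samples)]


def cutoff_curve(env, mod, base_hz=400.0, env_amount_hz=1200.0, lfo_depth_oct=0.5):
    """The envelope opens the filter when a sound comes in; the LFO then sweeps
    the result up and down by +/- lfo_depth_oct octaves."""
    return [(base_hz + env_amount_hz * e) * 2.0 ** (lfo_depth_oct * m)
            for e, m in zip(env, mod)]
```

For a one-bar LFO at 120 BPM, `rate_hz` is 120 / 60 / 4 = 0.5 Hz. The same idea applies to resonance or any other parameter: swap the Hz ranges for that parameter’s range.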

There’s one precious bundle I love from Make Noiss that has so many little tools, perfect for modulation and MIDI signal processing. And not to forget my friends at Manifest Audio, with their large array of Max patches that are perfect for modulation; they’ve also curated many racks for dub.

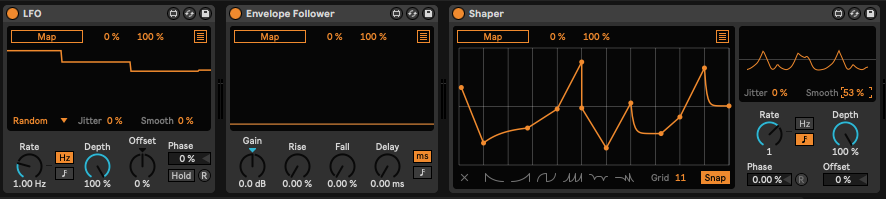

The 3 Amigos are here to turn a static idea into an animated figure.

Colours of Dub Techno

I know you might be confused by “colour” here, since we discussed saturation as a form of colour, but this is the final touch. These colours come from sources other than saturation and are very complementary. I’m referring to effects like chorus, phaser, flanger, tremolo, vibrato, auto-pan, harmonizer, and wobbler, and also one of the most important parts: the hiss. Apart from that last one, all those effects are often heavily used in dub, and it’s quite a nice touch to pick one or 2 for your sounds.

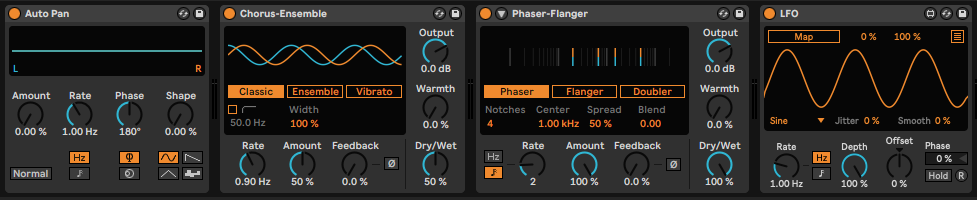

These guys are a lot of fun and sound pretty lovely.

Chorus, phaser, and vibrato

Chorus, phaser, and vibrato work really well with synths, pads, stabs, and chords. They give an engaging, trippy, stereo effect that often makes a dull sound jump out of the mix. Keep an eye out for phasing issues, which usually mean you’ve overused one of those effects. Phasing is quite common in dub, and I often fix those issues in mastering, but it’s better to control it while decorating your mix.

Flanger

A flanger gives a jet-plane feel to anything. It brings a pfshhh sound to metallic or noisy sources and can be quite psychedelic when used at a low level. I like it on hats and delays.
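Chorus, flanger, and vibrato can all be built from the same piece: a short delay line whose length is swept by an LFO. Here’s a minimal Python sketch of that idea. The `modulated_delay` name, the linear interpolation, and the rough millisecond ranges in the docstring are common textbook choices and assumptions here, not settings from any plugin mentioned in this post. Phasers work differently (a chain of all-pass filters), so they aren’t covered.

```python
import math


def modulated_delay(samples, base_ms, depth_ms, rate_hz, feedback=0.0, mix=0.5,
                    sample_rate=44100):
    """A delay line whose length is swept by a sine LFO.
    Rough starting points: chorus ~10-30 ms base, flanger ~1-5 ms base with
    some feedback, vibrato = a chorus-like setting with mix=1.0.
    Keep base_ms > depth_ms so the delay never drops below one sample."""
    max_len = int((base_ms + depth_ms) / 1000 * sample_rate) + 2
    buf = [0.0] * max_len
    w = 0  # write position
    out = []
    for n, x in enumerate(samples):
        sweep = depth_ms * math.sin(2 * math.pi * rate_hz * n / sample_rate)
        d = (base_ms + sweep) / 1000 * sample_rate  # current delay in samples
        # Read d samples behind the write head, with linear interpolation.
        pos = (w - d) % max_len
        i0 = int(pos) % max_len
        frac = pos - int(pos)
        delayed = buf[i0] * (1.0 - frac) + buf[(i0 + 1) % max_len] * frac
        buf[w] = x + feedback * delayed
        w = (w + 1) % max_len
        out.append((1.0 - mix) * x + mix * delayed)
    return out


# Example: a slow chorus on a 220 Hz sine.
tone = [math.sin(2 * math.pi * 220 * n / 44100) for n in range(44100)]
chorused = modulated_delay(tone, base_ms=20.0, depth_ms=5.0, rate_hz=0.8)
```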

Tremolo

Tremolo is a sort of secret sauce that everyone underuses. It’s basically a slow or fast modulation of a sound’s amplitude. It’s a superb tool for creating a 3D feel, where sounds seem to move away from you and come back. It turns anything linear into lively motion. At faster speeds, it can even be used to add swing or velocity variation to percussion. Combine it with an auto-pan and you get head-spinning, spaced-out moments.
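Since tremolo is just amplitude modulation and auto-pan is the same idea applied to the left/right balance, both fit in a short Python sketch. `tremolo` and `auto_pan` are hypothetical names, and the equal-power (cosine/sine) pan law is one common choice among several.

```python
import math


def tremolo(samples, rate_hz, depth=0.5, sample_rate=44100):
    """Amplitude modulation: the gain swings between 1 - depth and 1."""
    out = []
    for n, x in enumerate(samples):
        lfo = 0.5 * (1.0 + math.sin(2 * math.pi * rate_hz * n / sample_rate))  # 0..1
        out.append(x * (1.0 - depth * lfo))
    return out


def auto_pan(samples, rate_hz, sample_rate=44100):
    """Equal-power auto-pan: a sine LFO moves the sound between left and right."""
    left, right = [], []
    for n, x in enumerate(samples):
        pan = 0.5 * (1.0 + math.sin(2 * math.pi * rate_hz * n / sample_rate))  # 0 = left, 1 = right
        left.append(x * math.cos(pan * math.pi / 2))
        right.append(x * math.sin(pan * math.pi / 2))
    return left, right
```

At 120 BPM, a quarter-note tremolo runs at 2 Hz (120 / 60); faster rates give the choppier effect mentioned for percussion.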

Hiss

The hiss is quite important as well: the noise floor is deep in the DNA of dub. There are multiple noise makers. You can dig the internet for noise sources and recordings, or use noise-making tools such as RC-20. Satin also has a nice hiss you can use.
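To show how little noise a “noise floor” usually means in numbers, here’s a minimal Python sketch that mixes white noise in at a chosen level in dBFS. `add_hiss` and `db_to_gain` are hypothetical names; real tape hiss isn’t perfectly white, so treat this as a simplification, and the -60 dBFS default is an arbitrary starting point rather than a recommendation.

```python
import random


def db_to_gain(db: float) -> float:
    """Convert a level in dB to a linear gain (-20 dB -> 0.1, -60 dB -> 0.001)."""
    return 10.0 ** (db / 20.0)


def add_hiss(samples, level_db=-60.0, seed=None):
    """Adds a white-noise floor whose peak sits at level_db (dBFS)."""
    rng = random.Random(seed)  # pass a seed for repeatable noise
    g = db_to_gain(level_db)
    return [x + g * rng.uniform(-1.0, 1.0) for x in samples]
```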

Conclusion

Making dub techno should be a playground for experimentation. It’s a genre I approach with a very open mind, and so do many other fans. If you feel that people are just repeating the clichés and nothing new comes out of it, I’d say: dig deeper. There are some gems from people who push the boundaries of the genre.
